What Must Remain Human?

Don't start with automation. Start here.

Hi there,

Welcome back to Untangled. It’s written by me, Charley Johnson, and valued by members like you. This week, I’m sharing a process any organization can use to reconfigure human-machine workflows. Because the key question for companies and organizations adopting AI isn’t “what can we automate?” It’s “how do we design work that takes advantage of what machines do well, while protecting what makes us irreplaceably human?”

As always, please send me feedback on today’s post by replying to this email. I read and respond to every note.

🏡 Untangled HQ

This was a fun week y’all:

I kicked off Stewarding Complexity with Aarn Wennekers. The next event, “From Control to Stewardship,” is live.

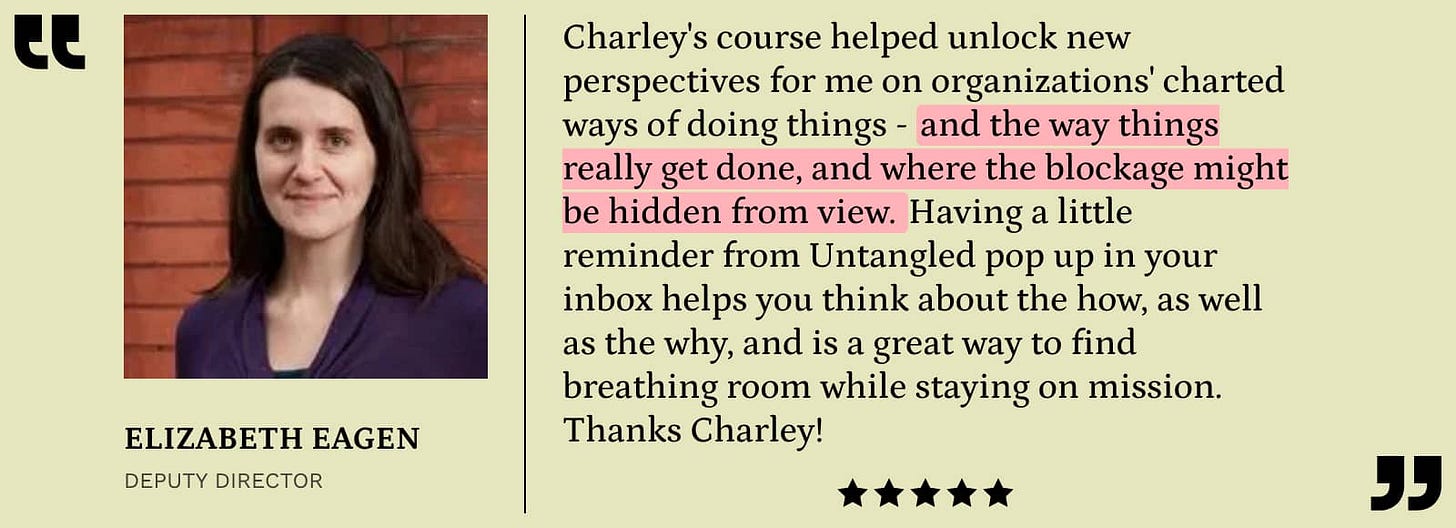

I launched three email courses. In each email course, I teach frameworks and tools that I wish I had when I led digital transformation at USAID or multi-stakeholder alliances against mis- and disinformation. Here’s what one early adopter had to say:

Each email course breaks big, messy topics into small, digestible, actionable steps and practices. Let me know what you think!

⚒️ What Must Remain Human?

Microsoft, Meta, Shopify, Coinbase, and a number of other companies have mandated the use of AI. Just last week, Meta tied employee performance to AI usage. What could possibly go wrong?

Mandates start the conversation with the wrong question: what can we automate? That question puts AI at the center of the design process.

The better starting point? What must remain human! The key question for these companies, and frankly for anyone introducing AI into a workflow, is: How do we redesign work so machines increase human capacity without eroding human judgment, accountability, or learning over time?

So let’s design a new process for organizations grappling with the division of labor between humans and machines, shall we?

Step 0: Are you sure?

The current paradigm of scale-at-all-costs generative AI means that adoption and use are complicit in the environmental costs of data centers, the psycho-emotional costs to data labelers, and the uncompensated theft of the world’s data. These might directly conflict with your organization’s values.

More practically, it means that you’re inviting into key organizational processes a tool that, based on how it’s designed, offers agreeable, sycophantic answers and makes stuff up. If edge cases pose a big risk to the work (e.g. ‘hallucinated’ legal citations in court filings, medical diagnosis failures, etc.), ‘just say no.’

Do you still want to continue? Okay, onwards.

Step 1: Identify What Must Remain Human

Start by asking what cannot be delegated. My conversation with Evan Ratliff showed that we underestimate what makes humans unique. Machines cannot make meaning. They cannot exercise judgment or discernment. They cannot reframe problems or redefine goals. They can’t imagine anything that hasn’t come before. Nor can they draw from implicit knowledge that hasn’t been turned into data. As I argued in “The Intelligence of a Hunch,” most of what we know remains unconscious until circumstances (surprise, confusion, deliberate reflection) require us to bring it into explicit awareness.

The point is, we need to identify where judgment calls are being made.

Map these explicitly:

Meaning-making (Check out Vaughn Tan’s work on meaning making)

Judgment under uncertainty

Framing problems and redefining goals

Ethical discernment and accountability

Imagination, hypothesis-generation, reframing

Here’s a li’l design rule: If a task requires deciding why, whether, or for whom, it stays human-led.

If you’re playing along and want to make this practical, draft a short list of non-delegable human capacities your program must protect and strengthen.

Step 2: Identify What Machines Can and Should Do Well

Once you’re clear on what must stay human, you can ask what machines actually do well—without tricking yourself about their limitations.

Machines perform best under bounded execution: low discretion, high consistency, explicit success criteria. They’re excellent at pattern detection, summarization, retrieval, and optimization within fixed constraints.

These tasks share key characteristics: inputs are explicit, context is bounded, success criteria are clear, edge cases don’t introduce material risk, and judgment isn’t required. The process can be articulated step by step.

This is why machines are good at writing code. If you were on the Internet this week, you probably saw Matt Shumer’s piece “Something Big is Happening.” 75 million people did! But as Helen Edwards, co-founder of The Artificiality Institute, put it,

“Shumer’s whole argument runs on one story: his personal experience watching AI get good at writing code. Software is the domain where AI has the most structural advantages — clear success criteria, automated testing, the ability to verify its own output. Generalizing from code to ALL knowledge work - every decision, every conflict, every ambiguity - is like watching a calculator outperform humans at arithmetic and concluding it will soon outperform them at parenting. The world most of us work in is messy, ambiguous, and context-dependent.”

Hear, hear! This is why we have to clearly separate what machines do well from what must remain human -- and, in hybrid tasks, reconfigure workflows to account for new roles, risks, decision points, accountability, uncertainty, and potential loss. Otherwise, we risk making the same ridiculous error Shumer made and extrapolating from bounded, low-discretion, high-consistency work to ALL work. Now immediately share this post so that 75 million people read this version instead! Please and thank you!
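If you want to make that separation concrete, here’s a minimal sketch in Python. It’s an illustration, not an established tool: the `Task` fields and the `is_machine_suitable` helper are names I made up, and they simply mirror the Step 2 characteristics (explicit inputs, bounded context, clear success criteria, low-risk edge cases) plus the Step 1 design rule about why, whether, and for whom.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of one task in a workflow."""
    name: str
    inputs_explicit: bool
    context_bounded: bool
    success_criteria_clear: bool
    edge_cases_low_risk: bool
    # Step 1 design rule: does the task decide why, whether, or for whom?
    decides_why_whether_or_for_whom: bool


def is_machine_suitable(task: Task) -> bool:
    """True only if every bounded-execution condition holds and the
    task involves no why/whether/for-whom judgment."""
    if task.decides_why_whether_or_for_whom:
        return False  # stays human-led, whatever the other answers are
    return all([
        task.inputs_explicit,
        task.context_bounded,
        task.success_criteria_clear,
        task.edge_cases_low_risk,
    ])


# Two illustrative tasks (the answers are assumptions, not findings).
summarize = Task("summarize meeting notes", True, True, True, True, False)
prioritize = Task("prioritize which communities get funding",
                  True, False, False, False, True)
```

The point of the sketch is the ordering: the human-judgment question is asked first and acts as a veto, so a task can’t be argued into automation just because it looks tidy on the other four criteria.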

Which brings us to the step most organizations skip.

Step 3: Account for Risk, Accountability Shifts, and Loss

Not everything that can be automated should be.

Amazon’s algorithmic hiring tool is the canonical example. The recruitment tool was trained on the previous 10 years of employment data, and technology was, and is, a male-dominated sector. So the algorithm assigned higher scores to men. The error wasn’t in the code. It was in treating hiring as a pattern-matching problem suitable for automation, when it’s actually a judgment task embedded in historical inequity.

Before designing workflows, classify tasks by what changes if a machine does the work.

Work that introduces new risks if automated:

Risk scoring or predictions tied to a decision, punishment, or exclusion

Automated prioritization of people or communities

Outputs treated as “objective” despite value-laden inputs

Work that shifts accountability if automated:

Triage and escalation decisions

Approval or denial of access, resources, or rights

“Recommendations” that quietly become defaults

Work that causes long-term loss if automated:

Erosion of human instinct or professional judgment

Loss of contextual understanding

Decline in learning-through-practice

Short-term efficiency at the expense of adaptive capacity

Amazon’s system didn’t “fail” by its own metrics—it efficiently processed résumés. But it introduced systematic bias and would have eroded recruiters’ ability to recognize talent that didn’t fit historical patterns.

Here’s an important design warning: Most automation failures happen when these categories are mistaken for machine-suitable work.

Step 4: Assign Roles

Make AI a tool or instrument, not an agent. Remember, there is no such thing as a “fully autonomous agent.”

This is where language matters. When you say “the AI decides” or “the algorithm recommends,” you’re already ceding authority. The machine doesn’t decide anything. It produces outputs based on patterns in training data. Humans decide what those outputs mean and what to do with them.

So we can’t let roles blur — we need to define responsibilities.

For machines, specify:

What it produces (signals, patterns, options—not decisions)

What it cannot decide or finalize

Where it must surface uncertainty, edge cases, or anomalies

For humans, specify:

Who interprets outputs and why

Who makes decisions

Who is accountable

Where humans can override, question, or reframe outputs

Consider the Dutch childcare benefits scandal (the “toeslagenaffaire”), where an automated fraud detection system flagged thousands of families for investigation. The system produced risk scores, but caseworkers treated them as verdicts rather than signals requiring interpretation. Families—disproportionately immigrants and people with dual nationalities—were ordered to repay tens of thousands of euros, some losing their homes. The fundamental error: treating the machine’s output as a decision rather than as information requiring human judgment.

Playing along? Create clear role definitions that prevent judgment and responsibility from drifting to the machine.
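One way to keep roles from blurring is to encode them in the record-keeping itself. The sketch below is a hypothetical illustration in Python: `MachineSignal`, `Decision`, and `decide` are names I invented, and the 0-to-1 risk score is an assumption. The machine can only produce a signal with its uncertainty attached, and a decision can’t be recorded without a named human and a written reason.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MachineSignal:
    """What the machine produces: a signal, never a decision."""
    case_id: str
    risk_score: float       # hypothetical score between 0.0 and 1.0
    uncertainty_note: str   # the machine must surface what it is unsure about


@dataclass(frozen=True)
class Decision:
    """What only a human can produce."""
    case_id: str
    outcome: str
    decided_by: str         # a named, accountable person
    justification: str


def decide(signal: MachineSignal, outcome: str,
           reviewer: str, justification: str) -> Decision:
    """Record a decision only when a named human has interpreted the signal."""
    if not reviewer.strip():
        raise ValueError("A decision needs a named human decision-maker.")
    if not justification.strip():
        raise ValueError("A decision needs a written justification.")
    return Decision(signal.case_id, outcome, reviewer, justification)


signal = MachineSignal("case-001", 0.82, "Sparse history; score is unreliable.")
decision = decide(signal, "no investigation", "A. Caseworker",
                  "Score driven by missing data, not by evidence of fraud.")
```

Notice that the outcome is whatever the reviewer concludes. Nothing in the code maps a high score to a verdict, which is exactly the drift the role definitions are meant to prevent.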

Step 5: Reconfigure the Workflow

Now you must design how judgment flows through the system. Instead of asking “What gets automated?”, you’re asking:

Where should machines enter the process?

Where must humans interpret before anything moves forward?

Where must humans retain final say?

Where should friction, delay, or pause be deliberately added?

Yes, deliberately added. Speed and efficiency aren’t virtues in themselves. Sometimes you need a human to sit with a decision overnight. Sometimes you need two people to review before proceeding. Sometimes you need space for doubt.

Research on sepsis detection algorithms shows this can work when designed carefully. Epic’s sepsis prediction model flags potential cases, but the workflow requires a nurse to review the patient directly and a physician to confirm before treatment begins. The machine MIGHT expand perception in this case (the jury is still out) but it should never interpret context, make the decision, or bear accountability -- that’s the job of nurses and doctors.

AI becomes a tool in a workflow. Not an actor. Not an authority. Not a decision-maker.

Okay, now create a redesigned workflow diagram showing human checkpoints, interpretation moments, override authority, and escalation paths.

Step 6: Make Accountability Explicit

Every workflow is also a governance structure.

Michigan’s unemployment fraud detection system illustrates what happens when accountability isn’t clearly mapped from the start.

Between 2013 and 2015, Michigan deployed an automated system called MiDAS (Michigan Integrated Data Automated System) to detect unemployment fraud. The algorithm flagged claimants based on pattern matching, and the state began aggressively pursuing repayment—often automatically seizing tax refunds, garnishing wages, and imposing penalties of up to 400% of the alleged overpayment. Over three years, the system falsely accused approximately 40,000 people of fraud. People lost their homes. Some filed for bankruptcy.

Yet, accountability was nowhere to be found. The fundamental problem wasn’t just that the algorithm was inaccurate. It was that the state had deployed a system that made consequential decisions about people’s lives without clearly establishing who was accountable at each step:

Who was responsible for verifying the algorithm’s accuracy before deployment?

Who made the decision to automatically impose penalties without human review?

Who was accountable when someone was wrongly accused?

Where could people appeal when the machine was wrong?

Who bore responsibility for the harm caused?

The answers were either unclear or nonexistent. The workflow had been designed around efficiency—automating fraud detection and penalty imposition—without mapping accountability alongside it.

Once the workflow is designed, accountability must be named:

Who is accountable at each decision point?

Where does responsibility sit legally, ethically, and socially?

Which decisions are irreversible—and must remain human?

What happens when the machine is wrong?

Changing the workflow changes accountability, whether you acknowledge it or not -- and if you can’t answer these questions, you’re not ready.

So, create an accountability map that travels alongside the workflow.
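If it helps, an accountability map can start as something very plain. Here’s a minimal sketch in Python, under the assumption that each decision point is listed with an owner, an appeal path, and whether it’s irreversible. The `DecisionPoint` fields and the `accountability_gaps` helper are hypothetical names for illustration; the checks just restate the questions above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DecisionPoint:
    """Hypothetical entry in an accountability map."""
    name: str
    accountable_person: Optional[str]  # who answers for this step
    appeal_path: Optional[str]         # where people go when the machine is wrong
    irreversible: bool
    human_decides: bool


def accountability_gaps(points: List[DecisionPoint]) -> List[str]:
    """List every unanswered accountability question in the workflow."""
    gaps = []
    for p in points:
        if not p.accountable_person:
            gaps.append(f"{p.name}: no one is accountable")
        if not p.appeal_path:
            gaps.append(f"{p.name}: no appeal path")
        if p.irreversible and not p.human_decides:
            gaps.append(f"{p.name}: irreversible decision is not human-made")
    return gaps


# Illustrative workflow (made-up entries, not a description of any real system).
workflow = [
    DecisionPoint("flag claim for review", "fraud team lead",
                  "claimant hotline", False, False),
    DecisionPoint("impose penalty", None, None, True, False),
]
gaps = accountability_gaps(workflow)
```

An empty list doesn’t prove the workflow is sound. A non-empty one is a clear sign you’re not ready.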

Step 7: Redesign Decision Support and Work Tempo

You can have a well-designed workflow on paper, but if the decision support system encourages people to click “approve” without thinking, everything falls apart.

Ask:

Does the interface support critical thinking or passive acceptance?

Are alternatives visible or collapsed into a single output?

Is uncertainty legible or hidden?

Does the workflow reward speed or quality of judgment?

And think about tempo. The COMPAS recidivism risk assessment tool, used in criminal sentencing decisions, produces scores in seconds. But the decision of whether someone should receive a longer sentence or be denied bail isn’t a task that should take seconds. Time is a design variable. Pace encodes values. Take a beat, or two! Require written justifications for decisions and ensure the decision-maker can explain the limitations and uncertainties embedded in the machine’s output.

Go back through your workflow, and design requirements for interfaces, tools, and timing that protect human judgment.

Step 8: Install Feedback Loops That Protect Human Judgment

Human–machine workflows are not static. They are dynamic, and must be actively maintained.

Here’s what I’ve seen happen: organizations implement responsible AI practices, and then six months later, people have learned to game the system or ignore the safeguards. Not because they’re bad actors, but because the machine nudged them to.

Monitor what the system teaches people to do:

Are humans checking less?

Are decisions narrowing?

Is dissent declining?

Are values being silently standardized?

To mitigate what might be lost, consider:

Periodic human-only reviews

Red-team interpretation sessions

Rotation of decision authority

Reassessment of non-delegable work

Sunset or pause tools that distort judgment

As the workflow evolves, pay attention to what the system teaches people to do.
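“Are humans checking less?” is hard to measure directly, but one rough proxy is how often people depart from the machine’s suggestion. The Python sketch below is an assumption-laden illustration: the function names are mine, and the 50% drop threshold is arbitrary. It compares a baseline period to a recent one and raises a flag when overrides fall sharply.

```python
from typing import List, Tuple

# Each pair is (machine_suggestion, human_outcome) for one case.
Pairs = List[Tuple[str, str]]


def override_rate(pairs: Pairs) -> float:
    """Share of cases where the human outcome differed from the suggestion."""
    if not pairs:
        return 0.0
    overrides = sum(1 for suggested, decided in pairs if suggested != decided)
    return overrides / len(pairs)


def checking_may_be_declining(baseline: Pairs, recent: Pairs,
                              drop_ratio: float = 0.5) -> bool:
    """Flag when the recent override rate falls below drop_ratio times
    the baseline rate. The 0.5 default is an arbitrary assumption."""
    base = override_rate(baseline)
    if base == 0.0:
        return False  # nothing to compare against
    return override_rate(recent) < base * drop_ratio


baseline = [("deny", "approve"), ("deny", "deny"),
            ("approve", "approve"), ("deny", "approve")]
recent = [("deny", "deny")] * 9 + [("deny", "approve")]
flag = checking_may_be_declining(baseline, recent)
```

A falling override rate could also mean the tool got better, so treat the flag as a prompt for a human-only review, not as a verdict of its own.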

The Real Work

Most organizations automate first and ask questions later. We've already seen companies (Klarna, Duolingo, etc.) get excited about AI, fire thousands of people, regret the decision, and then try to re-hire!

Companies and organizations can’t afford to center the question “what can we automate?” We need to start with human judgment, accountability, and meaning, and only then ask where machines can help.

✨ Before you go: 3 ways I can help

Advising: I help clients develop AI strategies that serve their future vision, craft policies that honor their values amid hard tradeoffs, and translate those ideas into lived organizational practice.

Courses & Trainings: Everything you and your team need to cut through the tech-hype and implement strategies that catalyze true systems change.

1:1 Leadership Coaching: I can help you facilitate change — in yourself, your organization, and the system you work within.

Thanks Charley, this is such a useful post! For me point 6 is key, as without individual responsibility and agency what are we even doing our jobs for in the first place?