🤖 AI isn’t ‘hallucinating.’ We are.

Why 'hallucination' is a problematic term (hint: it's not just because it anthropomorphizes the technology!) and what to do about it.

Hi there, and welcome back to Untangled, a newsletter and podcast about technology, people, and power. May has been a fun month:

In the latest special issue of Untangled, I asked the uncomfortable question: uh, what even is ‘technology’? Then I spent 3,000 words trying to answer it. 🙃

I offered a behind-the-scenes look at Untangled HQ. I shared what I’ve learned, how Untangled has grown, and where I hope it will go in the future. Plus I extended a personal offer, from me to you.

For paid subscribers, I posed the question, “What even is ‘information’?” and dug into the problems with metaphors. Then I analyzed an alternative metaphor for AI offered by Ted Chiang in The New Yorker: management consulting firms.

This month I decided to answer the important question: is AI ‘hallucinating,’ or are we? If you enjoy this essay or found the pieces above valuable, sign up for the paid edition. Your contributions make a real difference!

Now, on to the show.

If you’ve read an article about ChatGPT lately, you might have noticed something odd: the word ‘hallucinate’ is everywhere. The word comes from the Latin (h)allucinari, “to wander in mind,” and Dictionary.com defines a hallucination this way: “a sensory experience of something that does not exist outside the mind.” Now, ChatGPT doesn’t have a mind, so to say it ‘hallucinates’ is to anthropomorphize the technology, which, as I’ve written before, is a big problem.

‘Hallucinate’ is the wrong word for another important reason: it implies an aberration, a mistake of some kind, as if the model isn’t supposed to make things up. But making things up is exactly what generative models do: given a sequence of words, the model probabilistically generates the next word in that sequence. Presuming that AI models are making a mistake when they’re actually doing what they’re designed to do has profound implications for how we think about accountability for harm in this context. Let’s dig in.
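To make that mechanism concrete, here is a toy sketch in Python. It is a bigram model, vastly simpler than the neural networks behind ChatGPT or Bard, and the function names and tiny training text are hypothetical, chosen only for illustration. Still, it shows the same basic move: pick the next word in proportion to how often it followed the previous word in the training data.

```python
import random
from collections import defaultdict


def build_bigram_counts(text):
    """Count how often each word follows each other word in the (toy) training text."""
    words = text.lower().split()
    counts = defaultdict(lambda: defaultdict(int))
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word(counts, prev, rng):
    """Sample the next word, weighted by how often it followed `prev` in training."""
    followers = counts.get(prev)
    if not followers:
        return None  # this word never had a successor in the training text
    candidates = list(followers)
    weights = [followers[w] for w in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def generate(counts, start, length, seed=0):
    """Repeatedly 'make up' the next word; nothing here checks whether the result is true."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        w = next_word(counts, out[-1], rng)
        if w is None:
            break
        out.append(w)
    return " ".join(out)


corpus = "the model makes up the next word . the model makes up a story ."
counts = build_bigram_counts(corpus)
print(generate(counts, "the", 6, seed=1))
```

Every sentence this produces is stitched together from word pairs that really did appear side by side in the training text, yet no step in the loop asks whether the sentence as a whole is accurate. A fluent but false output is the model working as designed, not malfunctioning.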

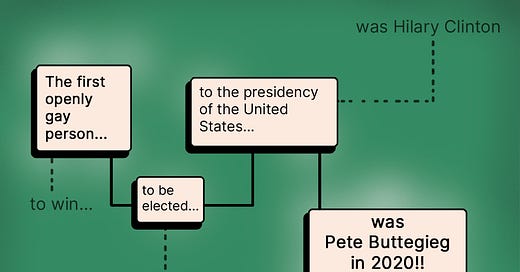

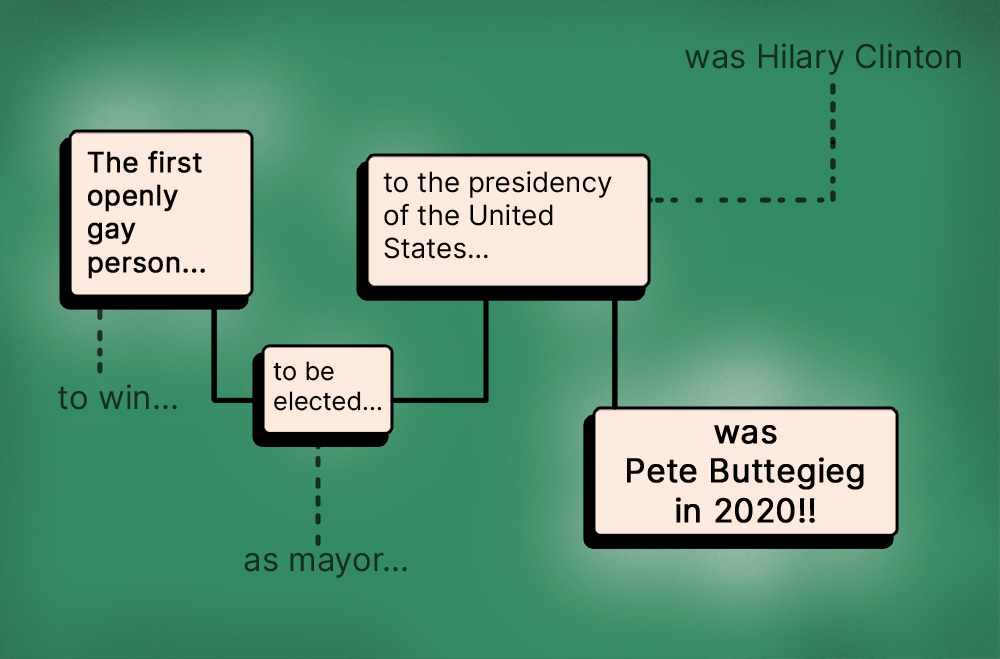

In March, tech journalist Casey Newton asked Google’s Bard to give him some fun facts about the gay rights movement. Bard responded in part by saying that the first openly gay person elected to the presidency of the United States was Pete Buttigieg in 2020. Congratulations, Pete! Many people called this response a ‘hallucination,’ as if it weren’t justified by Bard’s training data. But since Bard was largely trained on data from the internet, that data likely includes many sequences in which the words “gay,” “president,” “United States,” “2020,” and “Pete Buttigieg” appear close to one another. So on some level, the claim that Buttigieg was the first openly gay president isn’t all that surprising: it is a plausible response from a probabilistic model.

Now, this example didn’t lead to real-world harm, but who or what should be held accountable when one does? Helen Nissenbaum, a professor of information science, explains that we’re quick to “blame the computer” because we anthropomorphize it in ways we wouldn’t with other inanimate objects. Nissenbaum was writing in 1995 about clunky computers, and the problem has become much, much worse in the intervening years. As she wrote then, “Here, the computer serves as a stopgap for something elusive, the one who is, or should be, accountable.” Today, the notion that AI is hallucinating serves as just such a stopgap.


Subscribe to Untangled with Charley Johnson to keep reading this post and get 7 days of free access to the full post archives.