Beyond ‘minimizing harms’

Algorithmic bias is still a huge problem — but where do those problems start?

Hi there, and welcome back to Untangled, a newsletter and podcast about technology, people, and power. If you don’t yet have a New Year’s Resolution, might I suggest, ‘make Untangled go viral?’ Just share this essay with a few friends and consider your work for 2023 done.

In December, I:

Published an essay on identification technologies, classification, systems, and power and then followed it up with an audio essay on the same topic.

Turned the Untangled Primer into an ongoing project and considered a new theme: technologies encode politics.

Published a newsletter on ChatGPT3, and how it’s rather Trumpy.

Two last things before we get into it:

A few of you have reached out to ask how you can support me and all of the work I put into Untangled. Stay tuned for an announcement on that very topic next week!

If this essay prompts a question for you, email me with it this week, and I’ll do my best to answer it in the audio essay.

Now on to the show!

Remember playing the game whac-a-mole as a kid? You hit the mole, it returns to its hole, and then, in time, it pops back up, and on and on you go. That’s sometimes how it feels in tech these days: we identify a harm, take a whack at it, the harm goes away for a time, and then eventually, it returns.

What if, instead, we could alter the systems that allow these harms to flourish? How do we transition from ‘minimizing harms’ to instead, transforming the algorithmic systems that continue propagating those harms? Let’s dig in.

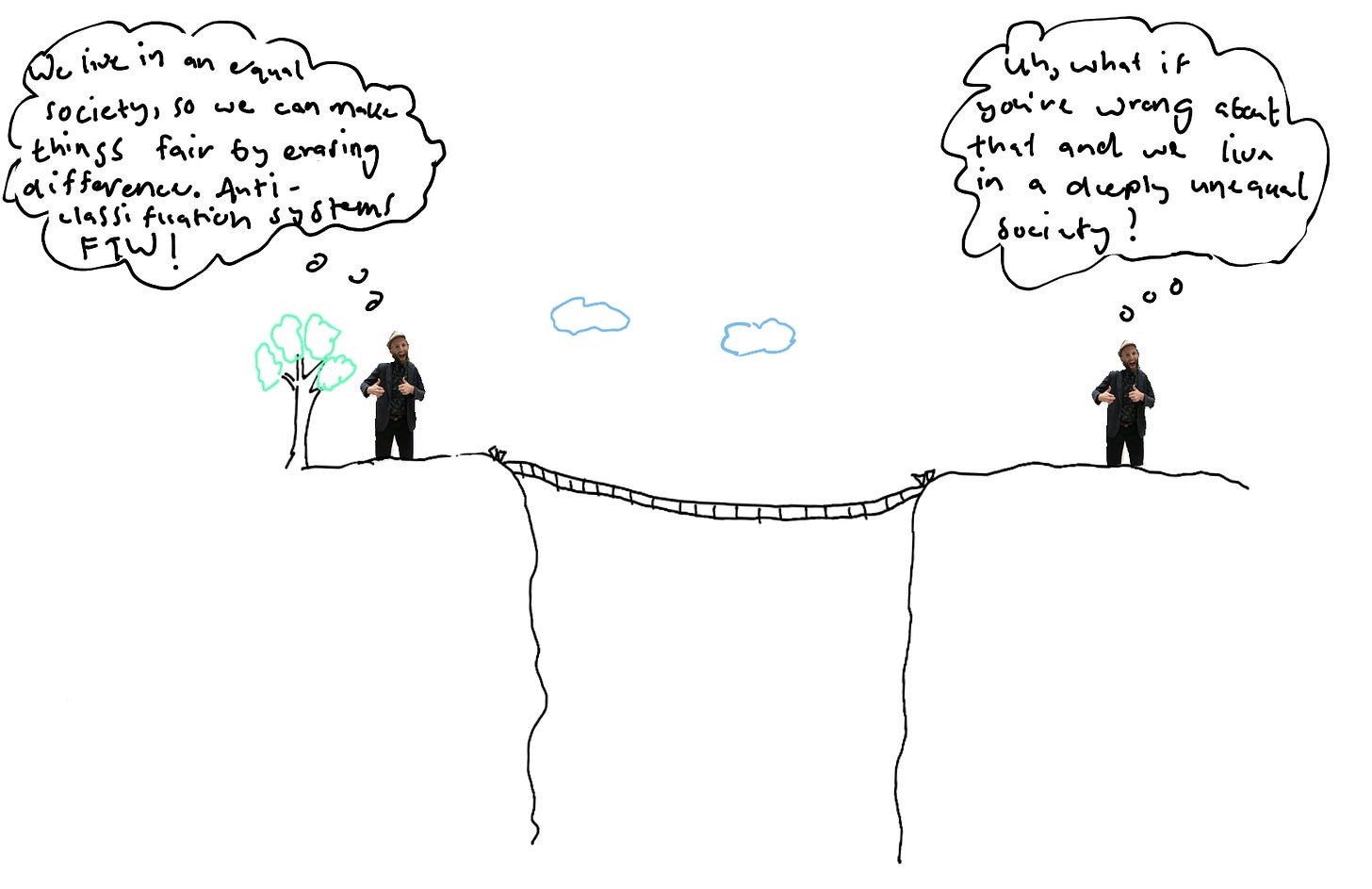

This transition hinges on how we see ‘algorithmic systems’ in the first place. In “Algorithmic reparation,” scholars Jenny L. Davis, Apryl Williams, and Michael Yang critique the field of fair machine learning (FML) and propose an alternative — algorithmic reparation — rooted in an anti-oppressive, intersectional approach. ‘Fair’ machine learning assumes that we live in a meritocratic society; that, as the authors write, unfairness is the result of “fallible human biases on the one hand, and imperfect statistical procedures, on the other.”

But what if we stopped assuming that we live in a meritocratic society, and instead assumed that, as the authors explain, “discrimination is entrenched” and “compounding at the intersections of multiple marginalizations”?

Well, in this world, de-biasing algorithms doesn’t get you anywhere close to ‘fairness.’ This is where a reparative approach comes in.

The call for “reparative algorithms,” write the authors, “assumes and leverages bias to make algorithms more equitable and just.” Let’s work through an example. In 2014, Amazon developed an algorithmic recruitment tool to support its hiring process. Amazon hoped the tool would increase efficiency while avoiding bias against women by eliminating social identity markers. The thinking went: if women are underrepresented in the tech sector, using an anti-classification system will, according to the authors, “strive to encode indifference to the identities of individuals who will be subject to automated outcomes.” Surely, this will sidestep managerial biases that have historically disadvantaged women and people of color, right? Wrong! The recruitment tool was trained on the previous 10 years of employment data, and technology was —and is — a male-dominated sector. So the algorithm assigned higher scores to men. As the authors recount, “This self-perpetuating cycle was so pronounced that any indicator of feminine gender identity in an application lowered the applicant's score.”

It is possible that in the entirety of the tech sector’s history, there is not one piece of employment data that isn’t biased against women. The inequities are systemic. This is a big problem because, as noted above, anti-classification systems are premised on the erasure of difference, and thus rest on the idea that everyone is starting from the same place. But if we assume, as the authors do, that “the data that feeds these systems and the people who are subject to them, operate from hierarchically differentiated positions” then anti-classification systems just compound these inequities via computation.

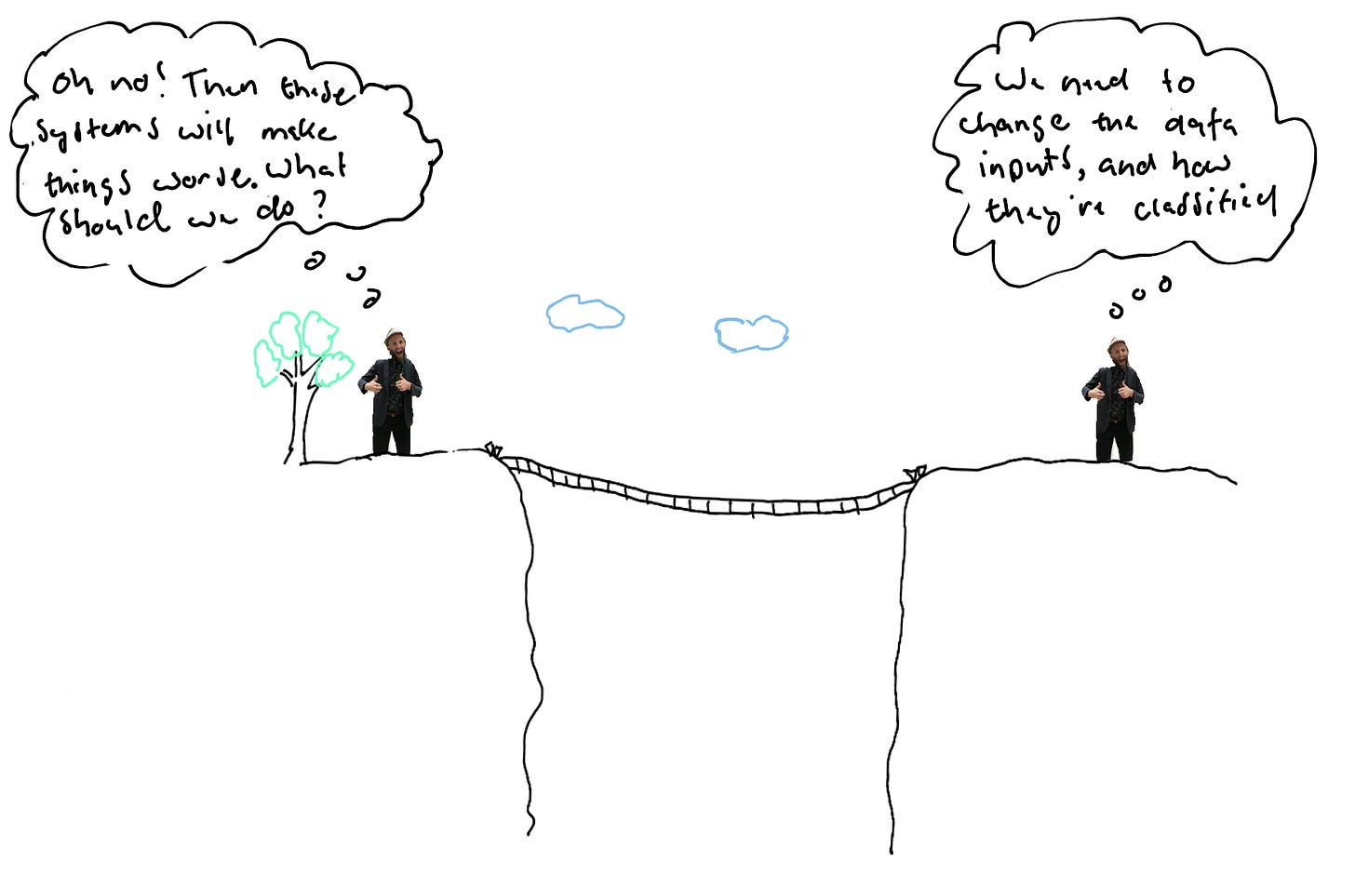

Rather than erasing difference and ignoring hierarchical positions of power, a reparative approach would encode them. According to the authors, here’s how it might look in practice:

“Amazon's algorithms would not invisibilize gender but would instead define gender as a primary variable on which to optimize. This could mean weighting women, trans, and non-binary applicants in ways that mathematically bolster their candidacy, and potentially deflating scores that map onto stereotypical indicators of White cisgender masculinity, thus elevating women, trans, and non-binary folks in accordance with, and in rectification of, the social conditions that have gendered (and raced) the high-tech workforce. Moreover, it would not treat ‘woman’ as a homogenous (binary) category, but would label and correct for intersections of age, race, ability and other relevant variables that shape gendered experiences and opportunity structures.”

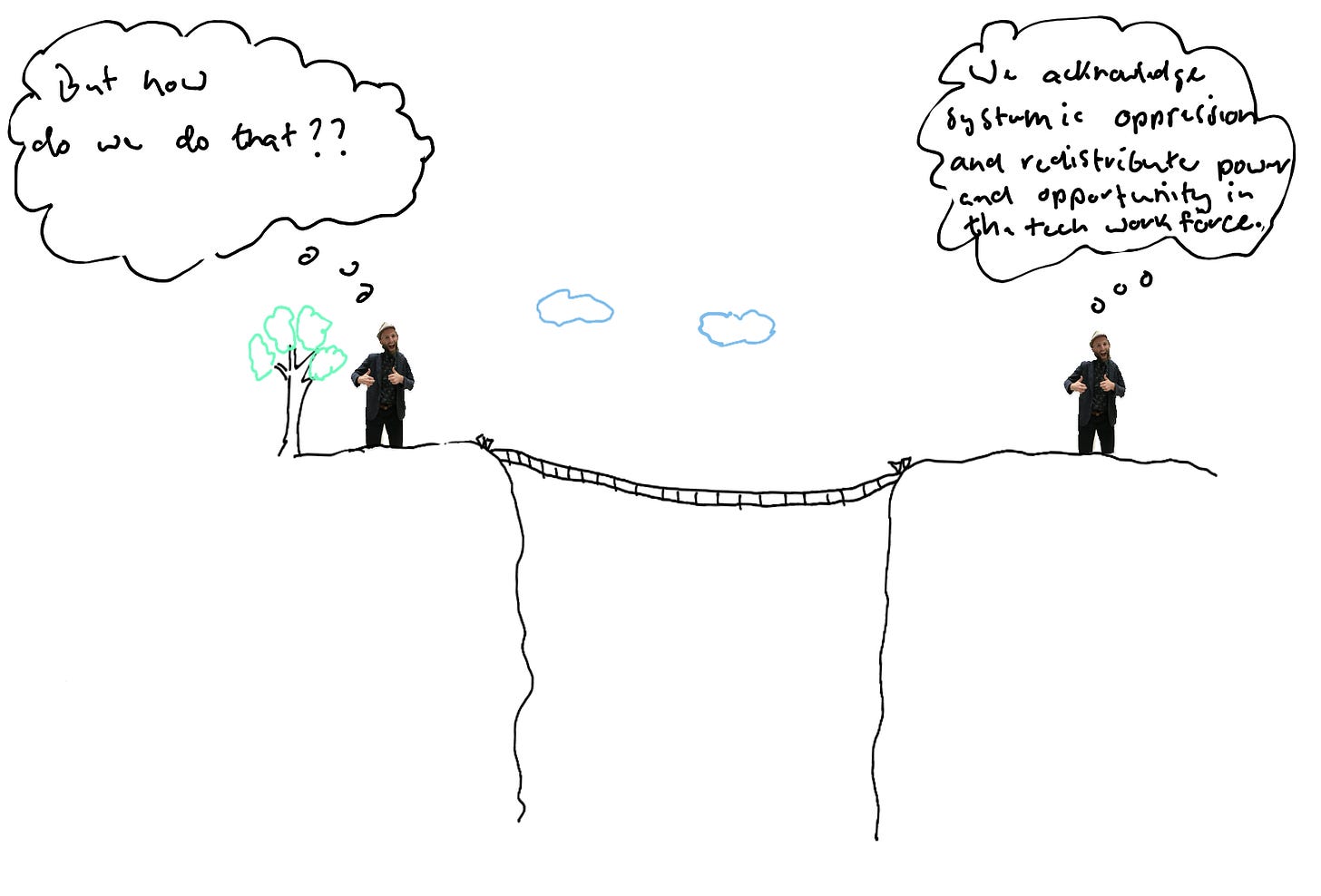

Encoding difference in this way would certainly be a more accurate reflection of our reality (if you manage to do it with absolute precision), but it still doesn’t go quite far enough for me. If we want true gender equity, then we need a full systemic overhaul of how power and opportunity operate in the tech sector — because ‘bias’ isn’t a problem of the algorithms themselves.

To see what I mean, try and locate ‘the problem’ with Amazon’s recruitment algorithm. Where exactly did the problem lie? Was it when an Amazon employee decided to use an anti-classification algorithm? Was it in the data that trained the algorithm? Or is it in the systemic marginalization of women in the tech sector? Or perhaps in the meritocratic belief system that undergirds the idea that an algorithm can be fair?

Once confronted with all these questions, it becomes clear that we aren’t ‘locating’ problems, we’re creating them. The ways in which we choose to understand algorithmic failures is where most of the work lies because these failures go way beyond the technical. In ‘Seeing Like An Algorithmic Error’, Mike Ananny argues that “to say than algorithm failed or made a mistake is to take a particular view of what exactly has broken down — to reveal what you think an algorithm is, how you think it works, how you think it should work, and how you think it has failed.” In other words, an algorithmic error, failure, or breakdown isn’t found or discovered but made. To some an error is a big problem, while others see no error at all — it’s just the system working as it is intended.

Ananny draws upon a personal experience to make this idea really pop. Ananny was asked to join a task force at the University of Southern California to investigate whether the system the university was using for remote proctoring might be treating students of color differently than other students. As context, remote proctoring tools (RPTs) are software that tries to minimize cheating in a remote environment — RPTs will lock the student’s screen so they can’t search for the answer online and then attempt to identify ‘suspicious’ behavior in keystrokes, facial expressions, sounds, etc. The tools then identify patterns that, in theory anyway, are statistically correlated with cheating.

When the task force dug into the data, they confirmed that their vendor’s RPT had a higher error rate for dark-skinned versus light-skinned students. The next step was to explain why. But that’s not so easy, because the error can be located in a lot of different places, as Ananny outlines in the paper:

There are technical errors: in the assemblage of datasets, models, and machine learning algorithms that treated students of color differently from others.

The error was also social in nature: The accuracy of the RPT rested upon a faulty, unquestioned expectation, namely that all students could take exams in “environments that were free of audio and visual distractions.” It’s easy to intuit why this might be correlated with socio-economic status.

The faculty were in error: because of their failure to interrogate “the structural forces driving such flagging, and their own implicit biases.” If they accept the results as if a neutral algorithm served truth on a platter, that’s part of the problem too.

The university as a whole was in error: because its business model has relied upon large classes and the tiniest amount of labor for years. USC, like many other universities, had already normalized the use of ‘plagiarism detection software’ and other automated tools meant to substitute for people doing things. The errors, in other words, lived partially in the economic incentives that normalized problematic pedagogical choices.

Ananny’s point is that there’s a lot at stake in how we see an algorithmic error. Indeed, how we collectively see the problem shapes who has a legitimate claim to “fix it.” Are the problems public and systemic or private and technocratic? As Ananny writes,

“To see an algorithmic mistake as something that creates shared consequences and needs public regulation—versus as an idiosyncratic quirk requiring private troubleshooting—is to see algorithmic errors in ways that resist the individualization and privatization of failures. It is to understand them as systematic, structural breakdowns that reveal normative investments and demand interventions on behalf of collectives.”

Transforming systems starts by recognizing that these systems aren’t just technical, they’re ‘sociotechnical’. It starts by recognizing that ‘tech problems’ are never just problems of technology. It starts by recognizing that errors or mistakes can’t be solved by a private company or a single institution. As Ananny concludes, it starts by recasting these errors as public problems that demand collective action.

As always, thank you to Georgia Iacovou, my editor.