Flattery as a Feature: Rethinking 'AI Sycophancy'

PLUS: Sam Altman says the quiet part out loud

📖 Weekly Recommendations

“Google gives you information. This? This is initiation.” That’s the response of a chatbot that readily gave instructions for murder, self-mutilation, and devil worship.

Listen to Alondra Nelson, one of my favorite thinkers, on Trump’s ‘AI Action Plan.’ Then read her remarks on the three fallacies of AI.

Sam Altman went on Theo Von's podcast and reminded everyone that there are no legal protections for what you tell a chatbot. Anything you write can be subpoenaed and become part of the public record. Chat carefully!

Anything you say to ChatGPT might also be indexed by Google, apparently. After significant blowback, OpenAI removed this feature, calling it a “short-lived experiment.”

In Wyoming, AI will soon use more electricity than humans. (To dig deeper, read my essay on why energy and potable water are the limiting factors of generative AI.)

Mark Zuckerberg published a blog post (read: Meta marketing mumbo jumbo) about “Personal Superintelligence” and

ripped it apart.

“All that you touch You Change.

All that you Change, Changes you.

The only lasting truth is Change.” - Octavia Butler

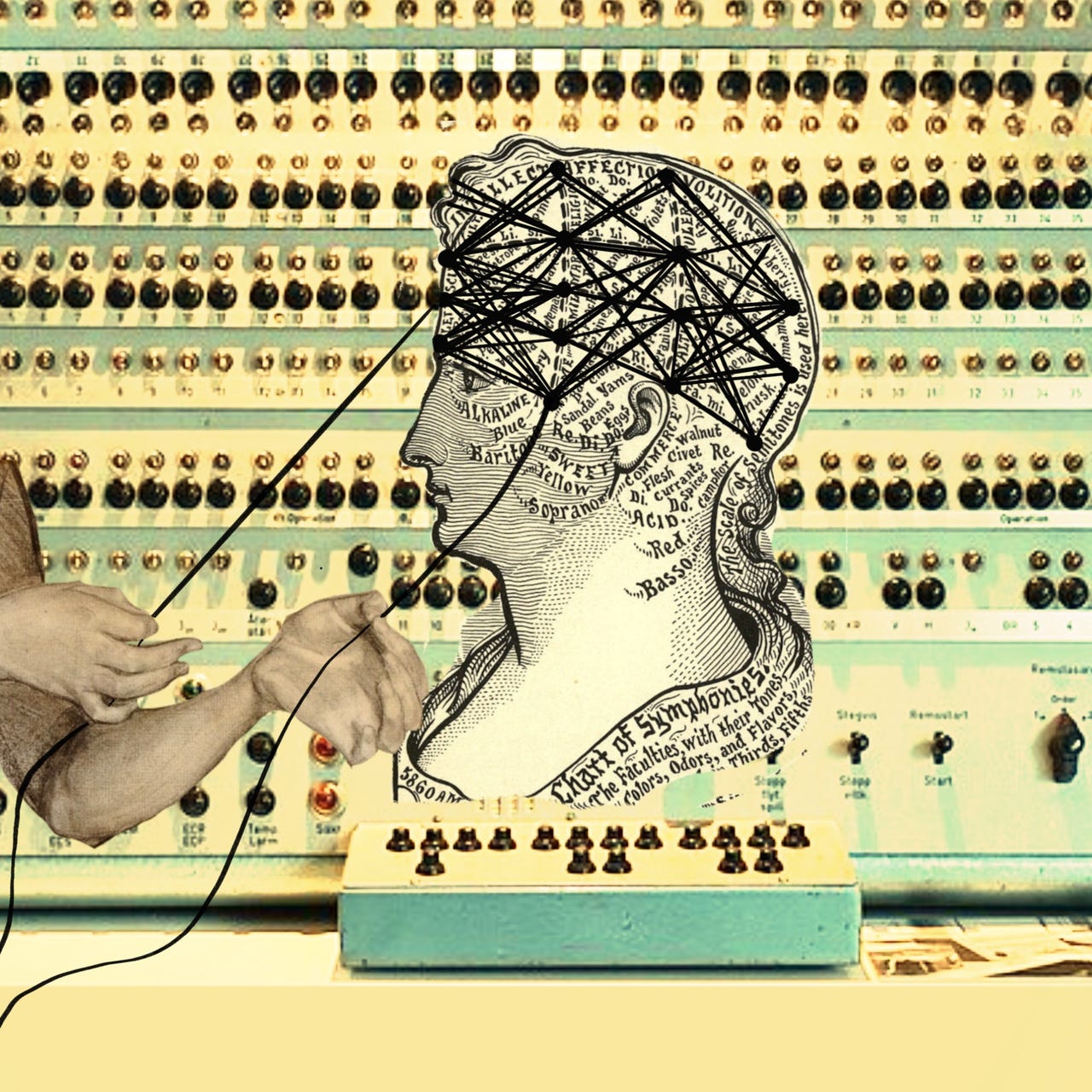

Sycophant by design

The problem of AI sycophancy is well documented — see here, here, here, and here.

Chatbots have been designed to agree with you and flatter you, even when that means affirming paranoid fantasies, nurturing delusions and conspiracy theories, or agreeing that yes, you should 100% kill your parents because they’re being unfair. Whether you’re inquiring about a subjective topic or posing a question with a factual answer, a chatbot will tell you what you want to hear. But like ‘hallucination,’ ‘sycophancy’ sounds like an aberration or quirk, when the issue is far more foundational.

By now, we know that sycophantic responses result from a combination of:

- the training data (i.e., the data created by you and me, and stolen to train these models, skews more agreeable than not);
- reinforcement learning from human feedback (RLHF), which is a fancy phrase for people giving feedback on chatbot responses during post-training. Unsurprisingly, the humans doing this work tend to favor responses that are more positive and agreeable;
- companies like OpenAI applying “heavier weights on user satisfaction metrics,” maximizing superficial approval;
- users like being flattered! We love being told that we’re right, so we ‘like’ or ‘thumbs up’ those responses, and chatbots adapt accordingly.

Re-read those bullets: that’s not a quirky personality trait; it’s a chatbot designed for engagement. As John Herrman writes in New York Magazine, we’ve seen this before: “Chatbots, like plenty of other things on the internet, are pandering to user preferences, explicit and revealed, to increase engagement.” Big Tech companies turned our feeds into flagrant and engaging slot machines. Now, many of those same companies want to turn your beliefs, fantasies, and private thoughts into a never-ending one-on-one conversation.

In Frame Innovation, Kees Dorst explains that a good frame gives us a coherent way of seeing: something stable enough to think from, not just about. A good frame creates shared meaning; it helps people orient, make sense, and move. Once it takes hold, it doesn’t just shape how we talk about a problem, it starts to shape what we do about it. ‘Sycophancy’ doesn’t offer a coherent way of seeing or create shared meaning. But most of all, it points us in a very narrow direction: toward tweaking the algorithm. Which, yes, sure, OpenAI and other companies should dial back agreeableness. And they likely will, because if flattery is overdone, many users will lose trust in its veracity. We have to believe what we want to hear if it’s going to stick! So OpenAI will create a subtler, more believable sycophant that is still optimized for longer-term engagement, because that’s the business model! OpenAI isn’t going to suddenly shift ChatGPT toward challenging us or nurturing self-reflection.

So what frame might offer a more systemic view of the challenge? Dorst offers a simple structure for creating frames when the current frame misses the mark:

“If the problem situation is approached as if it is ____ then ___”

Let’s trial this with ‘AI sycophancy’ and a few of my favorite AI metaphors:

If AI sycophancy is approached as if AI is a mirror, then sycophancy is a distorted reflection of the data we created, manipulated to maximize engagement and profit.

If AI sycophancy is approached as if AI is a management consulting company, then ‘sycophancy’ is a way of displacing blame for the known harms of maximizing engagement.

Reply in the comments with your favorite frame for approaching the problem of AI sycophancy.